Session (4)

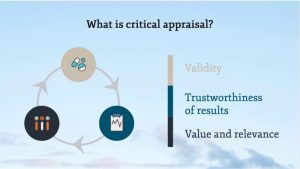

Step 3: Critical Appraisal

- Critical appraisal is the process of reviewing the evidence to determine whether a research study or article is trustworthy, relevant, and impactful. We check the study for bias so that we can make informed decisions about whether the research is valuable enough to change practice or to consider in future research projects.

How do we do it?

- Start by identifying the type of evidence you have, that is, the type of study you are evaluating: RCT, systematic review, cohort study, case study, etc. Once you know, you can use a checklist (see the resources listed below) and start asking (and answering) questions.

- It might seem like a difficult skill to cultivate, but it gets easier with practice.

The goal is to think critically while reading and ask yourself:

- Why was the study done?

- Did they answer their clinical question?

- What is the sample size and is it representative of a larger population?

- Are the instruments valid and reliable?

- Are the statistical tests appropriate to the study design?

- Are the results statistically and clinically significant?

- Are the conclusions drawn backed up by the data?

- Who funded the research? Any conflicts of interest?

Resources for Critical Appraisal

- Several institutes have created critical appraisal worksheets for each type of study. Once you have found an article that answers your PICO question, you can use the resources below to critically appraise it.

- Critical Appraisal Skills Programme (CASP) Checklists

- BMJ Best Practice Critical Appraisal Checklists

- Centre for Evidence-Based Medicine (CEBM) Critical Appraisal Tools

Critical appraisal starts by double-checking the following main sections:

I. Overview of the paper:

- The publishing journal and the year

- The article title: Does it state key trial objectives?

- The author(s) and their institution(s)

The presence of a peer-review process in the journal's acceptance protocol also adds robustness to the assessment of research papers and suggests a reduced likelihood that poor-quality research is published. Other areas to consider include the authors' declarations of interest and potential market bias. Attention should be paid to any declared funding or research grant in order to check for a conflict of interest.

II. Abstract: Reading the abstract is a quick way to get to know the article: its purpose, major procedures and methods, main findings, and conclusions.

- Aim of the study: It should be clearly stated.

- Materials and Methods: The study design and type of groups, the type of randomization, the sample size, the gender and age of participants, the procedure rendered to each group, and the measuring tool(s) should be clearly stated.

- Results: The measured variables with their statistical analysis and significance.

- Conclusion: It must clearly answer the question of interest.

III. Introduction/Background section:

An excellent introduction will thoroughly reference earlier work in the area under discussion and explain the importance and limitations of what is already known.

- Why is this study considered necessary? What is its purpose? Was the purpose identified before the study, or was it a chance result revealed as part of ‘data searching’?

- What has already been achieved, and how does this study differ?

- Does the scientific approach outline the advantages, along with possible drawbacks, associated with the intervention or observations?

IV. Methods and Materials section:

Full details of how the study was actually carried out should be given, including precise information on the study design, the population, the sample size, and the interventions used. All measurement approaches should be clearly stated.

V. Results section:

This section should clearly show what actually happened to the subjects. It may contain raw data and should explain the statistical analysis. Results can be presented in tables, diagrams, and graphs.

VI. Discussion section:

This section should include a thorough comparison with what is already known about the topic of interest and discuss the clinical relevance of what has been newly established. Possible limitations and the need for further studies should also be discussed.

- Does it summarize the main findings of the study and relate them to any deficiencies in the study design or problems in the conduct of the study (for example, dropouts or protocol deviations)? In an RCT, check whether participants were analyzed in the groups to which they were randomized, regardless of the treatment they actually received (intention-to-treat analysis).

- Does it address any source of potential bias?

- Are interpretations consistent with the results?

- How are null findings interpreted?

- Does it mention how the findings of this study relate to previous work in the area?

- Can they be generalized (external validity)?

- Does it mention their clinical implications/applicability?

- To which patients and settings are the results/outcomes/findings applicable, and will they affect clinical practice?

- Does the conclusion answer the study question?

- Is the conclusion convincing?

- Does the paper indicate ethics approval?

- Can you identify potential ethical issues?

- Do the results apply to the population in which you are interested?

- Will you use the results of the study?

Once you have answered the preliminary and key questions and identified the research method used, you can incorporate specific questions related to each method into your appraisal process or checklist.

1- What is the research question?

For a study to be valuable, it should address a significant problem in health care and provide new or meaningful results. A useful structure for assessing the problem addressed in the article is the PICO (Patient/Problem/Population, Intervention, Comparison, Outcome) method.

P = Patient/Problem/Population:

This involves identifying whether the research has a focused question. What is the chief complaint?

E.g., disease status, previous ailments, current medications, etc.

I = Intervention: An appropriate and clearly stated management strategy, e.g., a new diagnostic test, treatment, adjunctive therapy, etc.

C = Comparison: A suitable control or alternative, ideally specific and limited to one alternative choice.

O = Outcomes: The desired results or patient-related consequences have to be identified, e.g., eliminating symptoms, improving function, esthetics, etc.

2- What is the study type (design)?

The study design of the research is fundamental to the usefulness of the study.

In a clinical paper, the methodology used to generate the results should be fully explained. In general, all questions about the clinical query, the study design, the subjects, and the measures taken to reduce bias and confounding should be adequately and thoroughly explored and answered.

Participants/Sample Population:

Researchers identify the target population they are interested in. A sample is then taken from this population, and the results from the sample are generalized to the target population.

The sample should be representative of the target population from which it came. Knowing the baseline characteristics of the sample is important because it allows readers to see how closely the subjects match their own patients.

Sample size calculation (Power calculation): A trial should be large enough to have a high chance of detecting a worthwhile effect if it exists. Statisticians can work out before the trial begins how large the sample size should be in order to have a good chance of detecting a true difference between the intervention and control groups.
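To make the power calculation concrete, here is a minimal Python sketch for one common case: comparing the means of two groups with a two-sided test, using the normal approximation. The standard deviation, the smallest worthwhile difference, and the function name are hypothetical choices for illustration. Real trials often apply a t-distribution correction and inflate the number to allow for dropouts, so treat this as a rough guide, not a substitute for a statistician's calculation.

```python
import math
from statistics import NormalDist


def sample_size_per_group(sd: float, min_diff: float,
                          alpha: float = 0.05, power: float = 0.80) -> int:
    """Participants needed per arm to compare two means (two-sided test).

    Normal-approximation formula:
        n = 2 * ((z_{1 - alpha/2} + z_{power}) * sd / min_diff) ** 2
    rounded up to the next whole participant. No allowance for dropouts.
    """
    z = NormalDist()                     # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)   # about 1.96 for alpha = 0.05
    z_beta = z.inv_cdf(power)            # about 0.84 for 80% power
    n = 2 * ((z_alpha + z_beta) * sd / min_diff) ** 2
    return math.ceil(n)


# Hypothetical trial: outcome SD of 10 mmHg, smallest worthwhile difference of 5 mmHg
print(sample_size_per_group(sd=10, min_diff=5))  # 63 per group
```

Because the difference enters the formula squared, halving the smallest worthwhile difference roughly quadruples the required sample size.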

- Is the sample defined (human or animal, and if animal, which type)? What population does it represent?

- Does it mention eligibility criteria with reasons?

- Does it mention where and how the sample was recruited, selected, and assessed?

- Does it mention where the study was carried out?

- Is the sample size justified and correctly calculated? Is it adequate to detect statistically and clinically significant results?

- Does it mention a suitable study design/type?

- Is the study type appropriate to the research question?

- Is the study adequately controlled? Does it mention the type of randomization? Does it mention a control group or explain the lack of one?

- Are the samples similar at baseline? Is sample attrition mentioned?

- All studies should report the number of participants/specimens at the start of the study, together with details of how many completed it and the reasons for any incomplete follow-up.

- Does it mention who was blinded? Are the assessors and participants blind to the interventions received?

- Is it stated how the data were analyzed?

- Are any measurements taken likely to be valid?

Researchers should use measuring techniques and instruments that have been shown to be valid and reliable.

Validity refers to the extent to which a test measures what it is supposed to measure.

(the extent to which the value obtained represents the object of interest.)

- Soundness and effectiveness of the measuring instrument:

- What does the test measure?

- Does it measure what it is supposed to measure?

- How well, and how accurately, does it measure?

Reliability: In research, the term reliability means “repeatability” or “consistency”.

Reliability refers to how consistent a test is on repeated measurements. It is especially important when assessments are made on different occasions and/or by different examiners. Studies should state the method used to assess the reliability of any measurements taken and report the intra-examiner reliability (and the inter-examiner reliability when more than one examiner was involved).
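Agreement between two sets of categorical scores (for example, the same examiner scoring the same cases on two occasions, or two examiners scoring the same cases) is often summarized with Cohen's kappa, which corrects raw percentage agreement for the agreement expected by chance. The Python sketch below assumes nominal categories and uses made-up ratings; kappa is one common statistic, not the only one (continuous measurements, for instance, are usually assessed with an intraclass correlation coefficient).

```python
from collections import Counter


def cohens_kappa(ratings_1, ratings_2):
    """Cohen's kappa for two sets of categorical ratings of the same cases."""
    if len(ratings_1) != len(ratings_2) or not ratings_1:
        raise ValueError("need two non-empty rating lists of equal length")
    n = len(ratings_1)
    # Observed agreement: share of cases given the same category both times
    p_observed = sum(r1 == r2 for r1, r2 in zip(ratings_1, ratings_2)) / n
    # Agreement expected by chance, from each rating set's category frequencies
    freq_1, freq_2 = Counter(ratings_1), Counter(ratings_2)
    categories = freq_1.keys() | freq_2.keys()
    p_expected = sum(freq_1[c] * freq_2[c] for c in categories) / n ** 2
    if p_expected == 1:
        raise ValueError("kappa is undefined when chance agreement is 100%")
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical data: one examiner scores 10 radiographs for caries (1 = present)
# on two occasions a few weeks apart
first_session = [1, 1, 1, 0, 0, 1, 0, 1, 1, 0]
second_session = [1, 1, 0, 0, 0, 1, 0, 1, 1, 1]
print(round(cohens_kappa(first_session, second_session), 2))  # 0.58
```

Here the two sessions agree on 80% of cases, but 52% agreement would be expected by chance alone, so kappa is only about 0.58.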

3- Selection issues:

The following questions should be raised:

- How were subjects chosen or recruited? If not random, are they representative of the population?

- What type of blinding (masking) was used: single, double, or triple?

- Is there a control group? How was it chosen?

- How are patients followed up? Who are the dropouts? Why and how many are there?

- Are the independent (predictor) and dependent (outcome) variables in the study clearly identified, defined, and measured?

- Is there a statement about sample size issues or statistical power (especially important in negative studies)?

- If a multi-center study, what quality assurance measures were employed to obtain consistency across sites?

- Are there selection biases?

In a case-control study (for example, one comparing exercise habits):

- Are the controls appropriate?

- Were records of cases and controls reviewed blindly?

- How were possible selection biases controlled (prevalence bias, admission rate bias, volunteer bias, recall bias, lead time bias, detection bias, etc.)?

Cross-Sectional Studies:

- Was the sample selected in an appropriate manner (random, convenience, etc.)?

- Were efforts made to ensure a good response rate or to minimize the occurrence of missing data?

- Were reliability (reproducibility) and validity reported?

- In an intervention study, how were subjects recruited and assigned to groups?

- In a cohort study, how many reached final follow-up?

- Are the subjects representative of the population to which the findings are applied?

- Is there evidence of volunteer bias? Was there adequate follow-up time?

- What was the drop-out rate?

- Any shortcoming in the methodology can lead to results that do not reflect the truth. If clinical practice is changed on the basis of these results, patients could be harmed.

Researchers employ a variety of techniques to make the methodology more robust, such as matching, restriction, randomization, and blinding.
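As an illustration of one randomization technique, the Python sketch below generates an allocation sequence with permuted blocks, which keeps group sizes balanced throughout recruitment. The block size, the arm labels, and the function name are assumptions for the example; in a real trial the sequence should also be concealed from the people recruiting participants.

```python
import random
from collections import Counter


def block_randomization(n_participants, block_size=4, arms=("A", "B"), seed=None):
    """Allocation sequence built from randomly permuted blocks.

    Each block holds an equal number of each arm in random order, so the arms
    are exactly balanced at the end of every complete block.
    """
    if block_size % len(arms) != 0:
        raise ValueError("block_size must be a multiple of the number of arms")
    rng = random.Random(seed)  # seeded generator so the sequence can be reproduced
    sequence = []
    while len(sequence) < n_participants:
        block = list(arms) * (block_size // len(arms))  # e.g. ['A', 'B', 'A', 'B']
        rng.shuffle(block)                               # random order within the block
        sequence.extend(block)
    return sequence[:n_participants]  # a final partial block may be slightly unbalanced


allocation = block_randomization(12, seed=2024)
print(allocation)
print(Counter(allocation))  # 6 allocations to each arm
```

With small fixed blocks, recruiters may be able to predict the last allocation in a block, which is why trials sometimes vary the block size.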

Bias is the term used to describe an error at any stage of the study that is not due to chance. Bias leads to results that deviate systematically from the truth. Because bias is difficult to measure directly, researchers need to rely on good research design to minimize it. To minimize bias, the sample should be representative of the population. It is also important to consider the sample size and whether the study is adequately powered, that is, whether it has a high probability of detecting a clinically worthwhile effect at the chosen significance level (usually p < 0.05) if such an effect exists. Adequate power makes a statistically significant result likely when a real effect is present, but it does not guarantee one.

4- What are the outcome factors and how are they measured?

- Are all relevant outcomes assessed?

- Is measurement error an important source of bias?

5- What are the study factors and how are they measured?

- Are all the relevant study factors included in the study?

- Have the factors been measured using appropriate tools?

Data Analysis and Results:

- The statistical analysis should be evaluated:

- Were the tests appropriate for the data?

- Are confidence intervals or p-values given?

- How strong is the association between intervention and outcome?

- How precise is the estimate of the risk?

- Does it clearly mention the main finding(s) and does the data support them?

- Does it mention the clinical significance of the result?

- Are adverse events (or their absence) reported?

- Are all relevant outcomes assessed?

- Was the sample size adequate to detect a clinically/socially significant result?

- Are the results presented in a way to help in health policy decisions?

- Is there measurement error?

- Is measurement error an important source of bias?

Confounding Factors:

A confounder is associated with both the exposure and the outcome, forming a triangular relationship, but it is not on the causal pathway between them. It can make it appear as if there is a direct relationship between the exposure and the outcome, or it can mask an association that would otherwise have been present.
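The following Python sketch uses invented numbers to show how a confounder can create an apparent association. Assume smokers drink more coffee, and smoking (not coffee) raises the risk of disease. The crude risk ratio suggests coffee roughly doubles the risk, but within each smoking stratum the risk ratio is 1, and the Mantel-Haenszel pooled risk ratio (one standard way of adjusting for a stratifying variable) is also 1. Stratification is only one of several ways to control confounding.

```python
def risk_ratio(cases_exp, n_exp, cases_unexp, n_unexp):
    """Risk in the exposed group divided by risk in the unexposed group."""
    return (cases_exp / n_exp) / (cases_unexp / n_unexp)


def mantel_haenszel_rr(strata):
    """Risk ratio pooled across strata of a confounder (Mantel-Haenszel).

    Each stratum is (cases_exposed, n_exposed, cases_unexposed, n_unexposed).
    """
    numerator = sum(a * n0 / (n1 + n0) for a, n1, c, n0 in strata)
    denominator = sum(c * n1 / (n1 + n0) for a, n1, c, n0 in strata)
    return numerator / denominator


# Hypothetical cohort: exposure = coffee, outcome = disease, confounder = smoking
smokers = (80, 400, 20, 100)     # risk 20% in exposed and unexposed smokers
non_smokers = (5, 100, 20, 400)  # risk 5% in exposed and unexposed non-smokers

# The crude analysis ignores smoking by collapsing the two strata together
crude = risk_ratio(80 + 5, 400 + 100, 20 + 20, 100 + 400)
adjusted = mantel_haenszel_rr([smokers, non_smokers])

print(f"crude RR = {crude:.3f}")                              # 2.125
print(f"RR in smokers = {risk_ratio(*smokers):.3f}")          # 1.000
print(f"RR in non-smokers = {risk_ratio(*non_smokers):.3f}")  # 1.000
print(f"Mantel-Haenszel RR = {adjusted:.3f}")                 # 1.000
```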

6- What important potential confounders are considered?

- Are potential confounders examined and controlled for?

- Is confounding an important source of bias?

7- What is the statistical method in the study?

- Are the statistical methods described appropriate to compare participants for primary and secondary outcomes?

- Are the statistical methods specified in sufficient detail (if I had access to the raw data, could I reproduce the analysis)?

- Were the tests appropriate for the data?

- Are confidence intervals or p-values given?

- Are results presented as absolute risk reduction as well as relative risk reduction?
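The difference between the two measures is easiest to see with numbers. This Python sketch uses hypothetical event counts and exact fractions to avoid rounding errors. It also reports the number needed to treat (NNT = 1/ARR, rounded up), a related measure not mentioned above but commonly derived from the absolute risk reduction.

```python
import math
from fractions import Fraction


def risk_summary(events_control, n_control, events_treated, n_treated):
    """Absolute and relative risk reduction, plus number needed to treat."""
    cer = Fraction(events_control, n_control)  # control event rate
    eer = Fraction(events_treated, n_treated)  # experimental event rate
    if cer == 0:
        raise ValueError("relative risk reduction is undefined when the control rate is 0")
    arr = cer - eer                                # absolute risk reduction
    rrr = arr / cer                                # relative risk reduction
    nnt = math.ceil(1 / arr) if arr > 0 else None  # NNT, rounded up; None if no benefit
    return {"ARR": float(arr), "RRR": float(rrr), "NNT": nnt}


# Hypothetical trial 1: 40/200 events with control vs 30/200 with treatment
print(risk_summary(40, 200, 30, 200))   # {'ARR': 0.05, 'RRR': 0.25, 'NNT': 20}
# Hypothetical trial 2: a rare outcome, 2/1000 vs 1/1000
print(risk_summary(2, 1000, 1, 1000))   # {'ARR': 0.001, 'RRR': 0.5, 'NNT': 1000}
```

The second trial's 50% relative risk reduction sounds larger than the first trial's 25%, yet its absolute risk reduction is only 0.1 percentage points, so about 1,000 patients would need treatment to prevent one event.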

Interpretation of p-value:

- The p-value is the probability of obtaining a result at least as extreme as the one observed if the null hypothesis (no true difference or effect) were true. By convention, a p-value below 1 in 20 (p < 0.05) is regarded as statistically significant.

- When the p-value is below the chosen significance level (usually 0.05), we reject the null hypothesis and the result is considered statistically significant. Conversely, when the p-value is 0.05 or greater, the result is not statistically significant and we fail to reject the null hypothesis; this does not prove that the null hypothesis is true.
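As a minimal sketch of this definition, the Python function below computes a two-sided p-value for comparing two proportions with a normal-approximation z-test. This is one common test; others, such as the chi-squared test or Fisher's exact test, may be more appropriate depending on the data. The event counts are hypothetical.

```python
import math
from statistics import NormalDist


def two_proportion_p_value(events_1, n_1, events_2, n_2):
    """Two-sided p-value for H0: the two event rates are equal (normal approximation)."""
    p1, p2 = events_1 / n_1, events_2 / n_2
    pooled = (events_1 + events_2) / (n_1 + n_2)  # common rate assumed under H0
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_1 + 1 / n_2))
    if se == 0:
        raise ValueError("all or no participants had the event; the test is undefined")
    z = (p1 - p2) / se
    # Probability, if H0 were true, of a z at least this far from 0 in either direction
    return 2 * (1 - NormalDist().cdf(abs(z)))


# Same hypothetical event rates (20% vs 15%), two different sample sizes
print(two_proportion_p_value(40, 200, 30, 200))      # about 0.19: not significant
print(two_proportion_p_value(400, 2000, 300, 2000))  # well below 0.05
```

Only the sample size differs between the two calls. The smaller comparison is not statistically significant even though the observed difference is the same, which is why a non-significant p-value should not be read as evidence that there is no difference.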

Confidence interval:

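Repeating the same trial many times would not yield exactly the same result each time; the estimates would vary around the true value. A 95% confidence interval is constructed so that, across many such repetitions, about 95% of the intervals calculated would contain the true size of the effect. In practice, it is read as the range of plausible values for the true effect, and a narrower interval indicates a more precise estimate.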
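The repeated-trial interpretation can be checked by simulation. This Python sketch assumes a true event rate of 30% and uses a simple Wald interval for a proportion, an approximation that performs poorly with small samples or rates near 0% or 100%. The rate, sample size, number of repetitions, and seed are arbitrary illustration choices.

```python
import math
import random


def wald_ci(events, n, z=1.96):
    """Approximate 95% confidence interval for a proportion (Wald method)."""
    p_hat = events / n
    half_width = z * math.sqrt(p_hat * (1 - p_hat) / n)
    return p_hat - half_width, p_hat + half_width


def coverage(true_p=0.30, n=200, repeats=2000, seed=1):
    """Fraction of repeated hypothetical trials whose 95% CI contains the true rate."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(repeats):
        events = sum(rng.random() < true_p for _ in range(n))  # simulate one trial
        low, high = wald_ci(events, n)
        hits += low <= true_p <= high
    return hits / repeats


print(wald_ci(60, 200))  # one trial's interval, roughly (0.24, 0.36)
print(coverage())        # close to 0.95
```

Any single interval either contains the true rate or it does not; the 95% describes how often the method succeeds over many repetitions.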

8- Statistical results:

- Do statistical tests answer the research question?

- Were multiple statistical tests performed and comparisons made that were not planned in advance (data searching)?

- Correct statistical analysis of results is crucial to the reliability of the conclusions drawn from the research paper. Depending on the study design and the sample selection method employed, descriptive or inferential statistical analysis may be carried out on the results of the study.

- It is important to identify if this is appropriate for the study.

- Was the sample size adequate to detect a clinically/socially significant result?

- Are the results presented in a way to help in health policy decisions?

Clinical significance:

Statistical significance, as shown by the p-value, is not the same as clinical significance. Statistical significance judges whether treatment effects can be explained as chance findings, whereas clinical significance assesses whether treatment effects are worthwhile in real life. Small improvements that are statistically significant might not result in any meaningful clinical improvement. The following questions should always be kept in mind:

- If the results are statistically significant, do they also have clinical significance?

- If the results are not statistically significant, was the sample size large enough to detect a meaningful difference or effect?
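Both questions can be explored with a short Python sketch. It assumes a continuous outcome (say, blood pressure in mmHg) with a common standard deviation, uses normal approximations, and treats 5 mmHg as a hypothetical minimal clinically important difference (MCID); none of these numbers come from a real study.

```python
import math
from statistics import NormalDist

Z = NormalDist()  # standard normal distribution
MCID = 5.0        # hypothetical minimal clinically important difference, mmHg


def two_sample_p_value(mean_diff, sd, n_per_group):
    """Two-sided p-value for a difference in means (normal approximation, common SD)."""
    se = sd * math.sqrt(2 / n_per_group)
    return 2 * (1 - Z.cdf(abs(mean_diff) / se))


def power(true_diff, sd, n_per_group, alpha=0.05):
    """Approximate probability of p < alpha if the true difference is true_diff.

    Ignores the negligible chance of a significant result in the wrong direction.
    """
    se = sd * math.sqrt(2 / n_per_group)
    return Z.cdf(abs(true_diff) / se - Z.inv_cdf(1 - alpha / 2))


# Very large trial: a 0.5 mmHg difference is statistically significant
observed_diff = 0.5
p = two_sample_p_value(observed_diff, sd=10, n_per_group=20_000)
print(f"p = {p:.1e}; significant: {p < 0.05}; clinically important: {observed_diff >= MCID}")

# Small trial: a worthwhile 5 mmHg difference would usually be missed
print(round(power(true_diff=5, sd=10, n_per_group=20), 2))  # about 0.35
print(round(power(true_diff=5, sd=10, n_per_group=63), 2))  # about 0.8
```

The first trial is "significant" but its effect is a tenth of the assumed MCID; the second, with 20 per group, would have only about a one-in-three chance of detecting a genuinely worthwhile effect.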

9- What conclusions did the authors reach about the study question?

Conclusions should ensure that the recommendations stated are suitable for the results obtained, within the scope of the study. The authors should also address the limitations of the study, their effects on the outcomes, and suggestions for future studies.

- Are the questions posed in the study adequately addressed?

- Are the conclusions justified by the data?

- Do the authors extrapolate beyond the data?

- Are shortcomings of the study addressed and constructive suggestions given for future research?

- Is the conclusion convincing?

Bibliography/References:

Do the citations follow one of the standard formats of the Council of Biology Editors (CBE, now the Council of Science Editors)?

10- Are ethical issues considered?

If a study involves human subjects, human tissues, or animals, was approval from appropriate institutional or governmental entities obtained?

- Does the paper indicate ethics approval?

- Can you identify potential ethical issues?

Critical appraisal of RCTs: Factors to look for:

- Allocation (randomization, stratification, confounders).

- Follow up of participants (intention to treat).

- Data collection (bias).

- Sample size (power calculation).

- Presentation of results (clear, precise).

- Applicability to local population.
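Intention-to-treat analysis compares participants in the groups to which they were randomized, whether or not they adhered to treatment, whereas a per-protocol analysis keeps only those who completed the assigned treatment. The invented Python example below assumes outcomes were recorded for every randomized participant, including those who stopped treatment; handling missing outcomes is a separate problem not shown here.

```python
def success_rate(records, arm, per_protocol=False):
    """Proportion of successes in one arm.

    Intention-to-treat (default): everyone randomized to the arm, adherent or not.
    Per-protocol: only participants who completed the assigned treatment.
    """
    group = [r for r in records
             if r["arm"] == arm and (r["adherent"] or not per_protocol)]
    return sum(r["success"] for r in group) / len(group)


# Hypothetical trial of 20 participants (1 = successful outcome)
records = (
    [{"arm": "treatment", "adherent": True, "success": s} for s in [1, 1, 1, 1, 1, 0]]
    + [{"arm": "treatment", "adherent": False, "success": s} for s in [1, 0, 0, 0]]
    + [{"arm": "control", "adherent": True, "success": s}
       for s in [1, 1, 1, 1, 1, 0, 0, 0, 0, 0]]
)

for label, pp in [("Intention-to-treat", False), ("Per-protocol", True)]:
    diff = success_rate(records, "treatment", pp) - success_rate(records, "control", pp)
    print(f"{label}: difference in success rates = {diff:.2f}")
# Intention-to-treat: 0.10, Per-protocol: 0.33
```

Dropping the non-adherent participants, who did worse, more than triples the apparent treatment effect in this example, which is why per-protocol results from an RCT can be biased.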